Zaiqiang Wu*, Jingyuan Liu*, Long Hin Toby Chong, I-Chao Shen, Takeo Igarashi, Virtual Measurement Garment for Per-Garment Virtual Try-On, Graphics Interface 2024.

Paper: [PDF, 8.8MB]

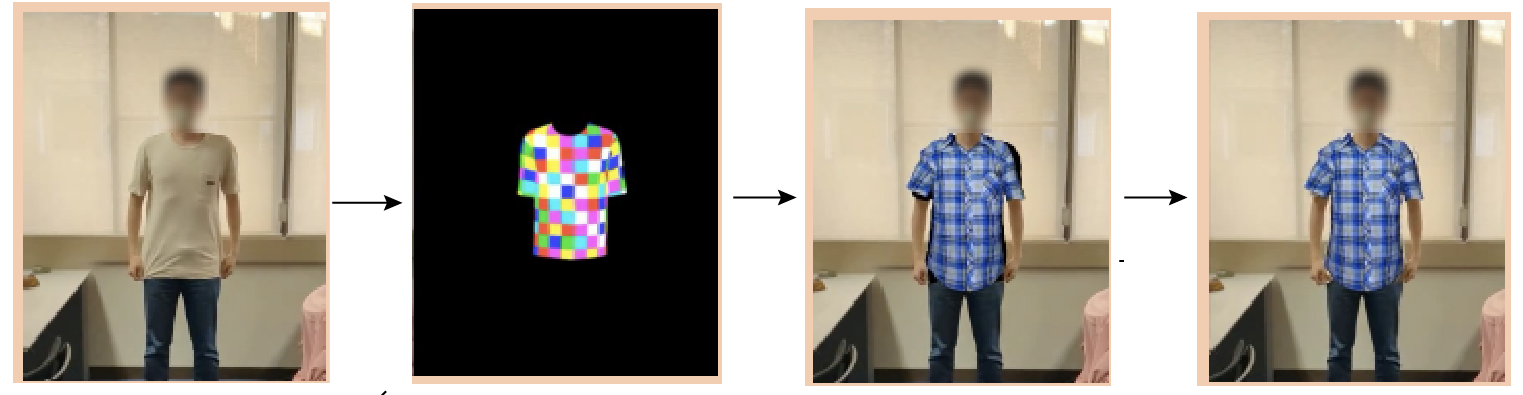

Our proposed method eliminates the need for wearing a physical measurement garment by introducing a virtual measurement garment. Additionally, we add a gap-filling module to improve the realism of the synthesized try-on image. By combining these features with a robust segmentation network, our proposed pipeline enables users to perform high-quality virtual try-on in more diverse environments.

The popularity of virtual try-on methods has grown in recent years because they let users preview how garments look on themselves without physically wearing them. However, existing image-based methods for general virtual try-on have limited ability to synthesize realistic and consistent garment images under different poses, due to two main difficulties: 1) the dataset used to train these methods contains a vast collection of garments but lacks fine details of each individual garment; 2) they synthesize results by warping a front-view image of the target garment in a rest pose, which yields poor quality and detail for other viewpoints and poses. To overcome these drawbacks, per-garment virtual try-on methods train garment-specific networks that can produce high-quality results with fine-grained details for a particular target garment. However, existing per-garment virtual try-on methods require the user to wear a physical measurement garment, which limits their applicability. In this paper, we propose a novel per-garment virtual try-on method that uses a virtual measurement garment, eliminating the need for a physical one, to guide the synthesis of high-quality and temporally consistent garment images under various poses. Furthermore, we introduce a gap-filling module that effectively fills the gap between the synthesized garment and the body parts. We conduct qualitative and quantitative comparisons against a state-of-the-art image-based virtual try-on method, along with ablation studies, to demonstrate that our method achieves superior realism and consistency in the generated garment images.

@article{wu2023wmvton,
  author  = {Zaiqiang Wu and Jingyuan Liu and Toby Chong and I-Chao Shen and Takeo Igarashi},
  title   = {Virtual Measurement Garment for Per-Garment Virtual Try-On},
  journal = {Graphics Interface},
  year    = {2024}
}